Proxmox VE Web GUI Pick NIC To Pass Through

A quick one today is a simple tutorial for passing NICs through to virtual machines on Proxmox VE. Passing through NICs avoids hypervisor overhead and can also help with compatibility issues between virtual NICs and some firewall appliances like pfSense and OPNsense. The downside is that unless the NICs support SR-IOV, they most likely cannot be shared between VMs in this configuration.

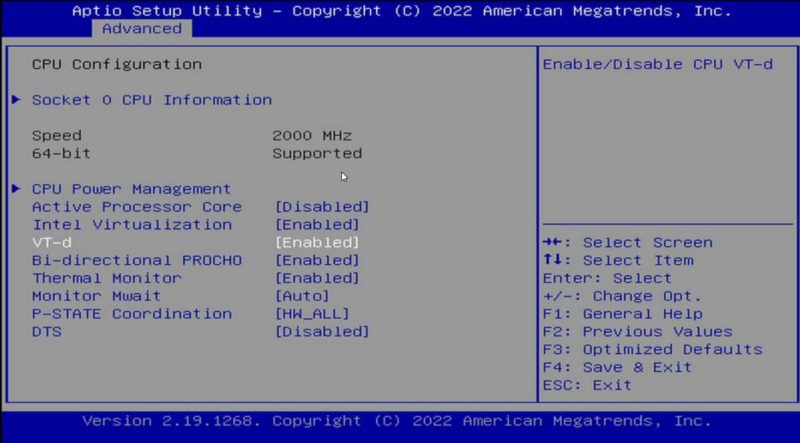

Step 1: BIOS Setup

The first thing to do is turn on the IOMMU feature on your system. For this, both the CPU and the platform need to support the feature. These days, most platforms support IOMMU, but some older platforms do not. On Intel platforms, this is called “VT-d”, which stands for Intel Virtualization Technology for Directed I/O.

Enable Intel VT D To Get IOMMU Working

On AMD platforms, you will likely see AMD-Vi as the option. Some system firmware simply labels the option IOMMU. These are the options you want to enable.

Of course, since this is Proxmox VE, you will want to ensure basic virtualization is enabled as well while you are in the BIOS. Also, since it is likely to be a main focus for people using this guide, if you are making a firewall/router on the machine, we usually suggest setting the On AC Power setting to “Always on” or “Last state” so that after a power failure, your network comes back up as soon as power returns.

Next, we need to determine if we are using GRUB or systemd as the bootloader.

Step 2: Determine if you are Using GRUB or systemd

This is a newer step, but if you install a recent version of Proxmox VE and use ZFS as the root (this may expand in the future), you are likely using systemd-boot (shortened to systemd in this guide), not GRUB. After installation, use this command to determine which you are using:

efibootmgr -v

If you see something like “File(\EFI\SYSTEMD\SYSTEMD-BOOTX64.EFI)” then you are using systemd, not GRUB.
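As a rough sketch of the check above, the efibootmgr output can be classified with a small shell function. The name classify_bootloader is hypothetical, and the matching is only a heuristic: it looks at the entry named by BootCurrent, and an entry that loads something else first (a Secure Boot shim, for example) will come back as unknown.

```shell
# Hypothetical helper: classify the active boot entry from `efibootmgr -v`
# output supplied on stdin. Heuristic only, not an official Proxmox VE tool.
classify_bootloader() {
    entries=$(cat)
    # BootCurrent holds the four-digit ID of the entry used for this boot.
    current=$(printf '%s\n' "$entries" | sed -n 's/^BootCurrent: *//p' | head -n 1)
    if [ -z "$current" ]; then
        echo "unknown"
        return 0
    fi
    # Pull out that entry's line and lowercase it for matching.
    active=$(printf '%s\n' "$entries" | grep "^Boot${current}" | tr '[:upper:]' '[:lower:]')
    case "$active" in
        *systemd-boot*) echo "systemd-boot" ;;
        *grub*)         echo "grub" ;;
        *)              echo "unknown" ;;
    esac
}

# Usage on a UEFI host:
#   efibootmgr -v | classify_bootloader
```

On installs managed by proxmox-boot-tool, running proxmox-boot-tool status should report similar information.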

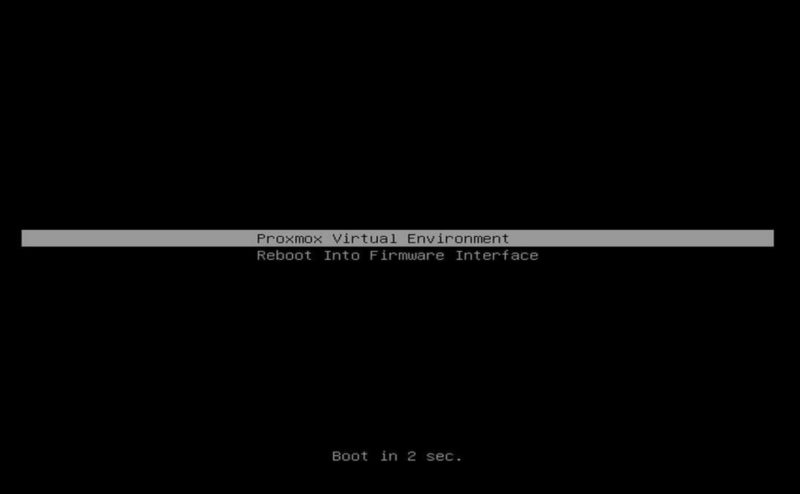

Another giveaway is when you boot, if you see a blue screen with GRUB and a number of options just before going into the OS, then you are using GRUB. If you see something like this, you are using systemd:

Proxmox VE Systemd Boot Menu

This is important because many older guides assume GRUB. If you are using systemd and follow the GRUB instructions, you will not enable the IOMMU needed for NIC pass-through.

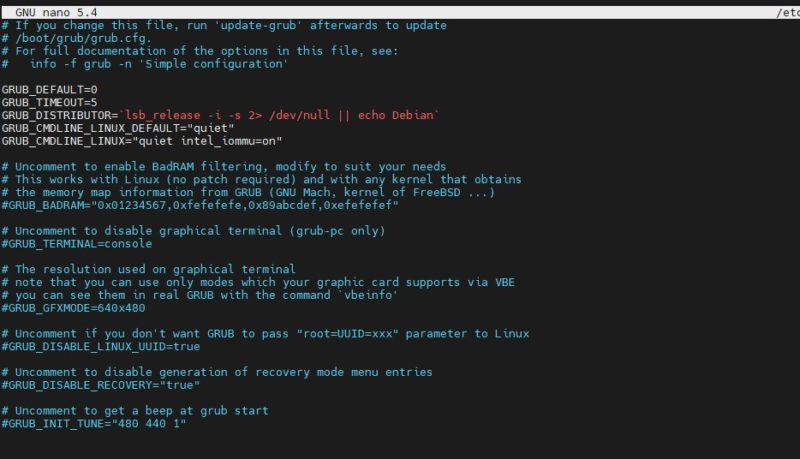

Step 3a: Enable IOMMU using GRUB

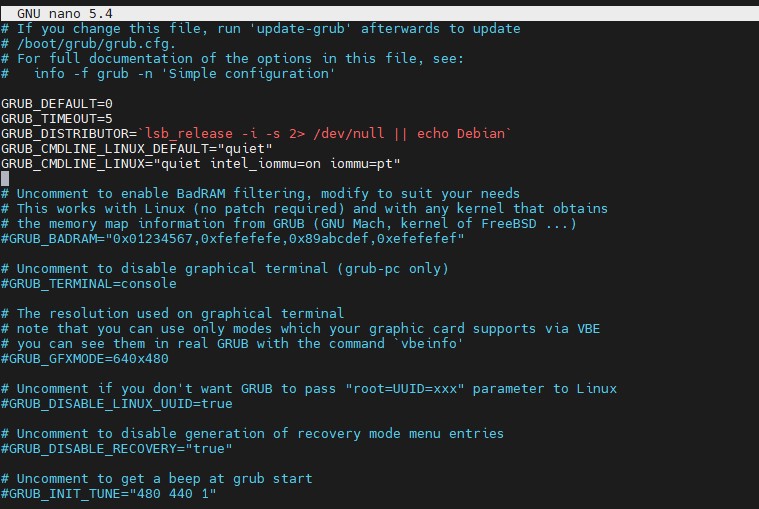

If you have GRUB, and most installations today will, then you will need to edit your configuration file:

nano /etc/default/grub

For Intel CPUs, add intel_iommu=on after quiet:

GRUB_CMDLINE_LINUX_DEFAULT="quiet intel_iommu=on"

For AMD CPUs, add amd_iommu=on after quiet:

GRUB_CMDLINE_LINUX_DEFAULT="quiet amd_iommu=on"

Here is a screenshot with the intel line to show you where to put it:

Proxmox VE Nano Grub Quiet Intel Iommu On

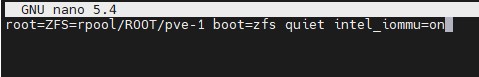

Optionally, one can also add IOMMU PT (pass-through) mode. PT mode improves the performance of other PCIe devices in the system when passthrough is being used. It works on both Intel and AMD CPUs and is set with iommu=pt. Here is the AMD version of what would be added, with an Intel screenshot following:

GRUB_CMDLINE_LINUX_DEFAULT="quiet amd_iommu=on iommu=pt"

Here is the screenshot of where this goes:

Proxmox VE Nano Grub Quiet Intel Iommu On Iommu Pt

Remember to save and exit.
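For those who would rather script the edit than use nano, here is a minimal sketch that appends a parameter to the GRUB_CMDLINE_LINUX_DEFAULT line only if it is not already there. The helper name add_grub_param is hypothetical, and the sketch assumes the line uses double quotes as in the examples above and that the parameter contains no regex special characters or | characters.

```shell
# Hypothetical helper: append a kernel parameter to GRUB_CMDLINE_LINUX_DEFAULT.
# Defaults to /etc/default/grub; a different file can be passed for testing.
add_grub_param() {
    param="$1"
    file="${2:-/etc/default/grub}"
    # Nothing to do if the parameter is already on the line.
    if grep -Eq "^GRUB_CMDLINE_LINUX_DEFAULT=\"([^\"]* )?${param}( [^\"]*)?\"" "$file"; then
        return 0
    fi
    scratch=$(mktemp) || return 1
    # Insert the parameter just before the closing quote, then write the
    # result back in place so the file keeps its ownership and permissions.
    sed -E "s|^(GRUB_CMDLINE_LINUX_DEFAULT=\"[^\"]*)\"|\1 ${param}\"|" "$file" > "$scratch" \
        && cat "$scratch" > "$file"
    status=$?
    rm -f "$scratch"
    return "$status"
}

# Usage on the host (as root), Intel example:
#   add_grub_param intel_iommu=on
#   add_grub_param iommu=pt
```

Making a backup copy of the file before running something like this is a sensible precaution.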

Now we need to update GRUB:

update-grub

Now go to Step 4.

Step 3b: Enable IOMMU using systemd

If in Step 2 you found you were using systemd, then adding bits to GRUB will not work. Instead, here is what to do:

nano /etc/kernel/cmdline

For Intel CPUs add:

quiet intel_iommu=on

For AMD CPUs add:

quiet amd_iommu=on

Here is a screenshot of where to add this using the Intel version:

Proxmox VE Systemd Quiet Intel_iommu=on

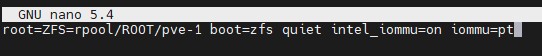

Optionally, one can also add IOMMU PT mode. It works on both Intel and AMD CPUs and is set with iommu=pt. Here is the AMD version of what would be added, with an Intel screenshot following:

quiet amd_iommu=on iommu=pt

Here is the Intel screenshot:

Proxmox VE Systemd Quiet Intel_iommu=on Iommu=pt
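The same edit can be scripted. This is a minimal sketch, assuming /etc/kernel/cmdline holds all kernel parameters on a single line (which is how the file appears in the screenshots here); the helper name add_cmdline_param is hypothetical.

```shell
# Hypothetical helper: append a parameter to the single-line kernel command
# line file. Defaults to /etc/kernel/cmdline; another file can be passed
# for testing.
add_cmdline_param() {
    param="$1"
    file="${2:-/etc/kernel/cmdline}"
    current=$(head -n 1 "$file")
    # Pad with spaces so only whole parameters match.
    case " $current " in
        *" $param "*) return 0 ;;
    esac
    printf '%s %s\n' "$current" "$param" > "$file"
}

# Usage on the host (as root), Intel example:
#   add_cmdline_param intel_iommu=on
#   add_cmdline_param iommu=pt
```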

Now we need to refresh our boot tool.

proxmox-boot-tool refresh

Now go to Step 4.

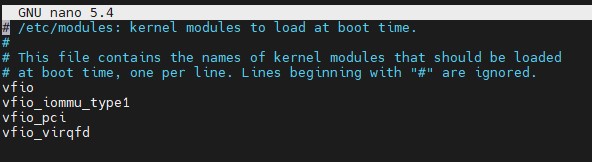

Step 4: Add Modules

Many will immediately reboot after the above is done, and it is probably a good practice. Usually, I like to add modules first just to save time. If you are more conservative, reboot, then do this step. To add the modules, edit:

nano /etc/modules

In that file you will want to add:

vfio

vfio_iommu_type1

vfio_pci

vfio_virqfd

Here is what it should look like:

Proxmox VE NIC Pass-Through Etc Modules Additions
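If you prefer to script this step, here is a minimal sketch that appends each module name only when it is not already listed, so running it twice does no harm. The helper name ensure_module is hypothetical, and the sketch assumes the file already ends with a newline.

```shell
# Hypothetical helper: add a module name to the modules file if it is missing.
# Defaults to /etc/modules; another file can be passed for testing.
ensure_module() {
    module="$1"
    file="${2:-/etc/modules}"
    # -x matches whole lines only, so "vfio" does not match "vfio_pci".
    grep -qxF -- "$module" "$file" 2>/dev/null || printf '%s\n' "$module" >> "$file"
}

# Usage on the host (as root):
#   for m in vfio vfio_iommu_type1 vfio_pci vfio_virqfd; do ensure_module "$m"; done
```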

Next, you can reboot.

Step 5: Reboot

This is a big enough change that you will want to reboot next. With PVE, a tip we have is to reboot often when setting up the base system. You do not want to spend hours building a configuration then find out it does not boot and you are unsure of why.

We will quickly note that we condensed the above a bit for more modern systems. If something fails in the verify step below, you may want to reboot before adding modules instead, and also not turn on PT mode before rebooting.

Step 6: Verify Everything is Working

This is the command you will want to use:

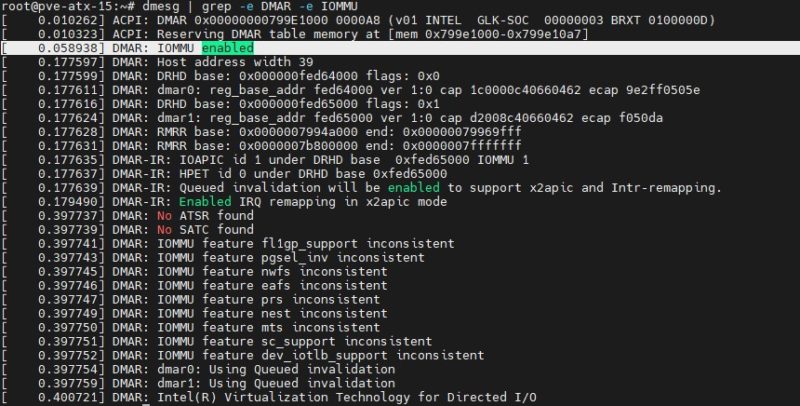

dmesg | grep -e DMAR -e IOMMU

Depending on the system and which options you have enabled, much of the output will differ. What you are looking for is the line highlighted in the screenshot, DMAR: IOMMU enabled:

Proxmox VE IOMMU Enabled

If you have that, you are likely in good shape.
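If that line is missing, one thing worth checking is whether the parameters from Step 3 actually reached the running kernel. Here is a minimal sketch, assuming the hypothetical helper name cmdline_has; it reads /proc/cmdline by default, which is where Linux exposes the command line the kernel booted with.

```shell
# Hypothetical helper: succeed if the given parameter is on the kernel
# command line. Defaults to /proc/cmdline; another file can be passed
# for testing.
cmdline_has() {
    param="$1"
    file="${2:-/proc/cmdline}"
    # Put each parameter on its own line, then look for an exact match.
    tr ' ' '\n' < "$file" | grep -qxF -- "$param"
}

# Usage on the host after rebooting, Intel example:
#   cmdline_has intel_iommu=on && echo "intel_iommu=on is active"
#   cmdline_has iommu=pt || echo "iommu=pt is not set"
```

If the parameter is absent, the most likely cause is editing the file for the wrong bootloader in Step 3, or skipping update-grub or proxmox-boot-tool refresh.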

Step 7: Configure Proxmox VE VMs to Use NICs

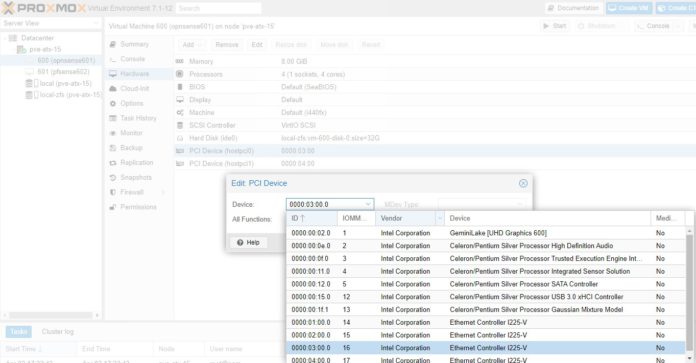

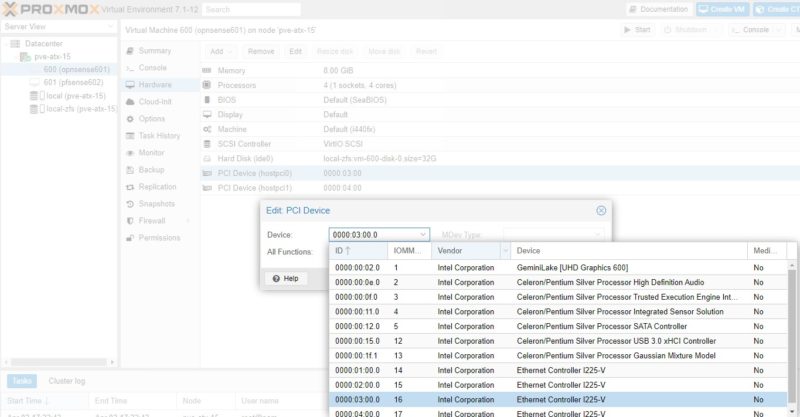

For this, we are using a little box very similar to the one in our Inexpensive 4x 2.5GbE Fanless Router Firewall Box Review. It is essentially the same, just a different version of that box. One of the nice features is that each NIC is its own i225-V, so we can pass through each individual NIC to a VM. Here is a screenshot from an upcoming video we have:

Proxmox VE Web GUI Pick NIC To Pass-Through

In the old days, adding a pass-through NIC to a VM was done via CLI editing. Now, Proxmox pulls the PCIe device ID and then also the device vendor and name. This makes it very easy to pick NICs in a system. One point that is nice about many of the onboard NICs is that the physical ordering as the NICs are labeled on the system should mean that we have sequential MAC addresses and PCIe IDs. In the above 0000:01:00.0 is the first NIC (ETH0). The device 0000:02:00.0 is the second, and so forth.

Hunsn 4 Port 2.5GbE I225 Intel J4125 Firewall Box NIC Ports

At this point, you are already done. You can see we have this working on both OPNsense and pfSense and the process is very similar. The nice thing is that by doing this, pfSense/OPNsense have direct access to the NICs instead of using a virtualized NIC device.
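For those who still prefer the CLI, the GUI selection corresponds to a hostpciN entry on the VM, which can be set with qm set. The sketch below only prints the command rather than running it, so it can be reviewed first. The helper name hostpci_cmd is hypothetical; VM ID 600 is the pfSense VM mentioned later in this article, and slot index 0 is an assumption for a VM with no other pass-through devices.

```shell
# Hypothetical helper: print the qm command that would attach a PCIe device
# to a VM. It does not change anything on the host.
hostpci_cmd() {
    vmid="$1"   # Proxmox VE VM ID
    slot="$2"   # hostpci index on that VM (0, 1, 2, ...)
    addr="$3"   # PCIe address as shown in the GUI, e.g. 0000:01:00.0
    printf 'qm set %s -hostpci%s %s\n' "$vmid" "$slot" "$addr"
}

# Usage: print the command for the first NIC, then run it by hand if it looks right.
#   hostpci_cmd 600 0 0000:01:00.0
```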

A Few Notes on IOMMU with pfSense and OPNsense

After these NICs are assigned there are a few key considerations that are important to keep in mind:

- Using a pass-through NIC will make it so the VM will not live migrate. If a VM expects a physical NIC at a PCIe location, and it does not get it, that will be an issue.

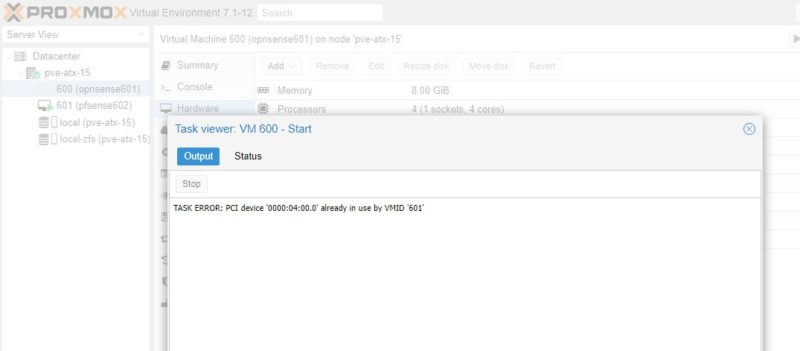

- Conceptually, there is a more advanced feature called SR-IOV that allows a single NIC to be passed through to multiple VMs. With the lower-end i210 and i225-V NICs that we commonly see in pfSense and OPNsense appliances, you will be dedicating the NIC to one VM. That means another VM cannot use the NIC. Here is an example where we have the pfSense VM (600) using a NIC that is also assigned to the OPNsense VM. We get an error trying to start OPNsense. The Proxmox VE GUI will allow you to configure pass-through on both VMs if they are off, but only one can be on and active with the dedicated NIC at a time.

Proxmox VE Web GUI NIC Being Assigned To A Second VM for OPNsense when it is already assigned to pfSense

- Older hardware may not have IOMMU capabilities. Newer hardware has both IOMMU and ACS, so most newer platforms make it easy to separate PCIe devices and dedicate them to VMs. On older hardware, the way PCIe devices are grouped sometimes causes issues if you want to, as in this example, pass through NICs separately to different VMs.

- You can utilize both virtual NICs on bridges along with dedicated pass-through NICs in the same VM.

- At 1GbE speeds, pass-through is not as big of a difference compared to using virtualized NICs. At 25GbE/100GbE speeds, it becomes a very large difference.

- When we discuss DPUs, one of the key differences is that the DPU can handle features like bridging virtual network ports to physical high-speed ports and that happens all on the DPU rather than the host CPU.

- This is an area where it takes longer to set up than a bare-metal installation, and it adds complexity to a pfSense or OPNsense installation. The benefit one gets is that doing things like reboots is usually much faster in the virtual machine. One can also snapshot the pfSense or OPNsense image in the event one makes a breaking change.

- We suggest having at least one more NIC in the system for Proxmox VE management and other VM features. If one uses pass-through for all NICs to firewall VMs, then there will not be a system NIC.
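On the grouping point in the notes above, it can help to see how the kernel has grouped devices before assigning NICs to different VMs. With the IOMMU enabled, Linux exposes the groups under /sys/kernel/iommu_groups. Here is a minimal sketch that lists them; the helper name list_iommu_groups is hypothetical.

```shell
# Hypothetical helper: print each PCIe device alongside its IOMMU group.
# Defaults to the kernel's sysfs tree; another directory can be passed
# for testing.
list_iommu_groups() {
    root="${1:-/sys/kernel/iommu_groups}"
    for dev in "$root"/*/devices/*; do
        # Skip the unexpanded glob when no groups exist.
        [ -e "$dev" ] || continue
        group="${dev#"$root"/}"   # strip the leading directory
        group="${group%%/*}"      # keep only the group number
        printf 'group %s: %s\n' "$group" "${dev##*/}"
    done
}

# Usage on the host:
#   list_iommu_groups
```

NICs that land in separate groups can generally be handed to separate VMs. If two NICs share a group, that is the situation where older platforms tend to cause trouble.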